AI shouldn’t offload cognitive labor onto collaborators.

AI has made it remarkably easy to generate content at work.

Strategy memos. Research summaries. Brainstorms. Competitive analyses.

In minutes, you can produce pages of text that look substantial: thorough, researched, impressive.

And to be clear: teams should be using AI. Anyone ignoring it is falling behind.

But somewhere along the way, we’ve started confusing output with contribution.

Generating volume isn’t contribution if it offloads the cognitive labor of synthesis onto everyone else.

That’s the real risk in the AI era.

Cognitive Load Is the Constraint

Human attention is finite.

Working memory, the mental space where we process and connect ideas, can hold only a handful of meaningful elements at once. When we overwhelm it, performance drops. Priorities blur. Decisions slow. Insight degrades.

AI, meanwhile, has no such constraint. It can generate content endlessly.

The friction appears when human processing limits collide with machine-scale output. When long, unfiltered AI documents circulate without interpretation, the issue isn’t that the information exists. It’s that someone else now has to sort through it.

What was intended as productivity becomes additional processing work.

The Anti-Pattern: Raw AI Output

Cognitive labor is the work of turning information into meaning. That means deciding:

- What matters most?

- What can be ignored?

- What decision does this inform?

- What do we recommend?

AI can surface patterns and assemble data. What it cannot do is determine strategic relevance in context. That still requires judgment.

When someone shares a 15-page AI-generated document with no synthesis, the implicit message becomes:

“Here’s everything. You figure out what matters.”

That doesn’t lighten the team’s workload. It redistributes it.

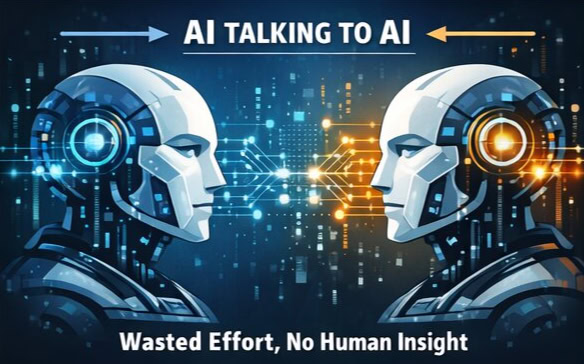

When AI Starts Talking to AI

There’s another pattern emerging that’s even more telling.

One person uses AI to generate a massive document.

The recipient feeds it into their own AI to summarize it.

Now we’ve created a loop: AI generating bulk content so another AI can compress it, while the human layer never fully engages.

If your collaborator has to run your document through AI just to extract the takeaway, the work wasn’t complete.

The value was never in the volume. It was in the interpretation.

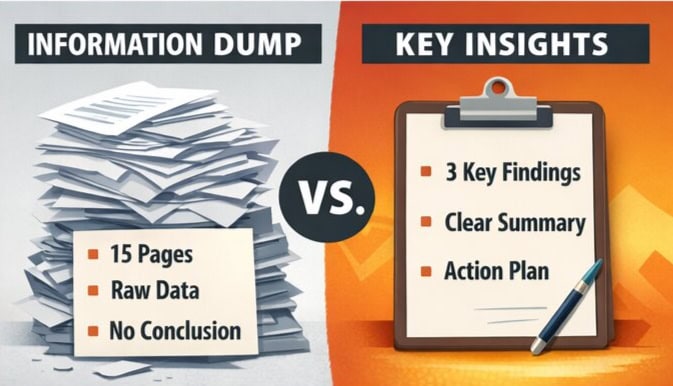

A Simple Example

Raw AI dump:

“Here’s a 12-page AI overview of our competitors’ marketing strategies.”

No context. No recommendation. No prioritization.

Now multiply the reading, filtering, and decision-making effort across everyone who receives it.

That’s cognitive labor scaled across the team.

Synthesized contribution:

“I used AI to analyze competitor positioning. Three patterns stood out:

- Everyone emphasizes speed, not trust

- No one addresses mid-market complexity

- Video is emerging as the differentiator

Recommendation: We double down on trust and clarity in our next campaign.

Full notes linked below.”

Same tool. Different standard.

In the second version, AI accelerated the analysis. The human distilled the insight.

That distinction matters.

This Is a Work Design Issue

As a Chief Work Officer, I want my team using AI aggressively. It expands capability, speeds up research, and lowers the cost of iteration.

But when production becomes effortless, discernment becomes the differentiator.

High-performing teams treat attention as a shared resource. They don’t waste it. They don’t flood it. They protect it.

That means rewarding clarity over volume, and synthesis over documentation.

AI doesn’t remove the responsibility to think. It raises the expectation that you will.

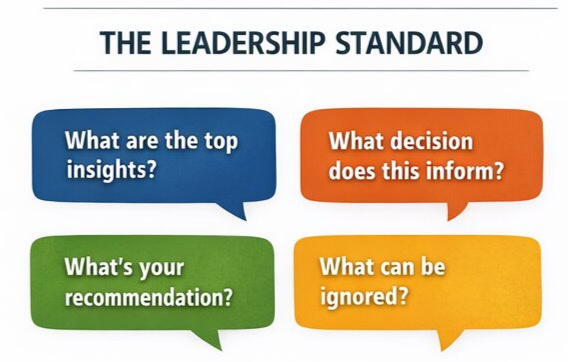

The Standard

Before sharing AI-assisted work, pause and answer:

- What are the top 1–3 insights?

- What decision does this inform?

- What do I recommend?

- What can safely be ignored?

If those answers aren’t clear, the work isn’t done.

The standard shouldn’t be:

“Look what AI produced.”

It should be:

“Here’s what it means.”

That’s the human layer.

That’s the leadership layer.

And that’s where real contribution still lives.